Hacker News Generator

How to generate new Hacker News titles on Google Cloud

It was 1:30 PM and I was just coming back from lunch at the office, with some spare time before my next meeting.

«Let’s just take a quick look at Hacker News before getting back to work»

I remember one of the top posts being “My business card runs Linux” (link). I had seen this exact one a few times and couldn’t help thinking this particular title was very «HackerNews-y».

What if we had a script generate new titles from a corpus of all previous HN titles? How far would the apple fall from the tree?

(Please note that my goal here is not to point the finger at this particular post. I was actually interested in the challenges of running Linux on a system as small as a business card.)

Let’s do it!

The proof of concept

Obtaining the data

First things first, I needed to make sure I could get hold of previous Hacker News data to build on 🧐

Fortunately, I had a hunch that it would be accessible in a public BigQuery dataset.

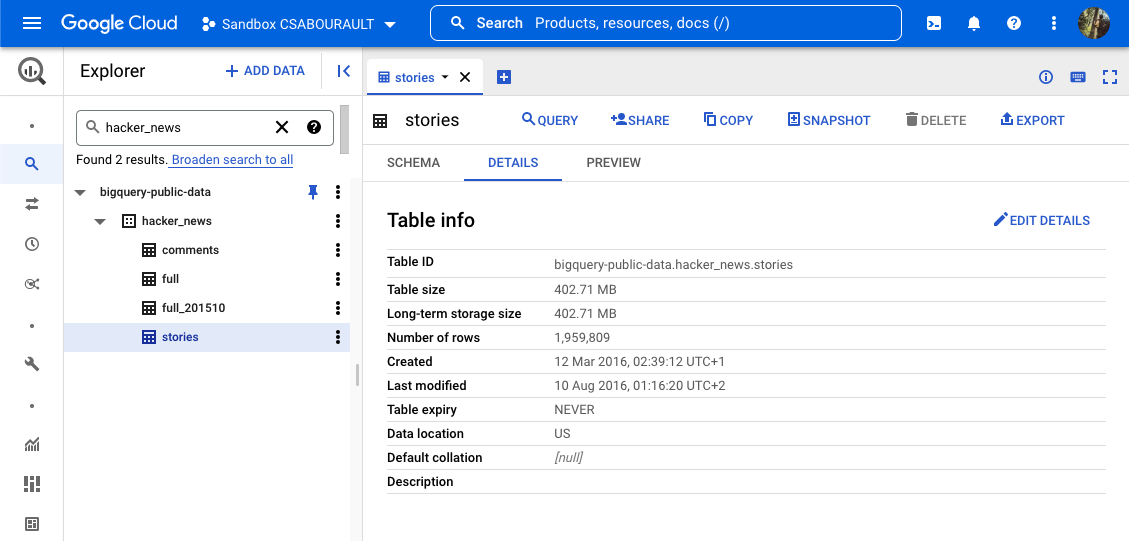

After a bit of searching, I found bigquery-public-data.hacker_news.stories!

BigQuery is a cloud-based data warehouse with an easily accessible query engine API.

In a typical project, you would use BigQuery to store any long-term data from your applications, such as logs, that you would then use for analytics, or any application data that you archive.

To showcase its impressive query engine on large datasets, BigQuery also lets you query public datasets published by other users and companies.

As you can see on this screenshot, the whole dataset holds 1,959,808 records of stories posted between October 2005 and October 2015!

After a bit of data exploration, I decided to extract only the posts with a score above 100 to restrict the titles I would be working with.

With a very simple SQL query, I got around 43,000 rows out of the nearly 2 million, which BigQuery returned in under a second. Not too bad!

BigQuery accepts standard SQL, nothing fancy. Using the bq command-line tool, I ran the query and extracted all the titles, one per line, into a local CSV file.
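As a rough sketch of the filter described above (not the original query): the snippet below uses Python's built-in sqlite3 as a local stand-in for BigQuery, so it runs without a cloud account. The column names title and score, the sample rows and the helper names are assumptions made for illustration.

```python
import sqlite3

# Hypothetical sample rows; the real table holds close to 2 million stories.
SAMPLE_STORIES = [
    ("Sample title with many upvotes", 2100),
    ("Sample title with few upvotes", 12),
    ("Another popular sample title", 340),
    ("Sample title with exactly 100 upvotes", 100),
]

# Assumed shape of the filter. On BigQuery, the FROM clause would name
# bigquery-public-data.hacker_news.stories instead of a local table.
QUERY = "SELECT title FROM stories WHERE score > ? ORDER BY score DESC"


def popular_titles(rows, min_score=100):
    """Return the titles whose score is strictly above min_score."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE stories (title TEXT, score INTEGER)")
        conn.executemany("INSERT INTO stories VALUES (?, ?)", rows)
        return [title for (title,) in conn.execute(QUERY, (min_score,))]
    finally:
        conn.close()


def to_corpus(titles):
    """Join titles with newlines: one title per line, ready for the corpus file."""
    return "\n".join(titles) + "\n"


if __name__ == "__main__":
    print(to_corpus(popular_titles(SAMPLE_STORIES)), end="")
```

Note that a story with a score of exactly 100 is left out, since the filter keeps scores strictly above the threshold.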

The logic

Now my plan was to generate new sentences from this corpus using Markov chains.

Markov chains are relatively simple systems to generate sequences of random tokens based on a simple rule:

- The first S words in the sentence are always randomly picked from a row in the corpus.

- To pick the next word in a sequence, you look at the S previous words.

The number of previous words you keep in memory is the state.

Using the 2 previous words to pick a 3rd one gives you a state size of 2.

By increasing the size of your state, you avoid jumping between totally unrelated sequences of words. However, you also decrease the number of possible outcomes.

To keep the sentences less random, since the titles were already using some very precise technical jargon, I settled on a state size of 3.
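To make the rule concrete, here is a minimal pure-Python sketch of a word-level Markov chain with a configurable state size. It is only an illustration of the idea, not how Markovify is implemented, and the names build_model and generate are made up for this example.

```python
import random
from collections import defaultdict

END = None  # sentinel marking the end of a title


def build_model(titles, state_size=2):
    """Map each run of `state_size` words to the words seen right after it."""
    transitions = defaultdict(list)
    openings = []
    for title in titles:
        words = title.split()
        if len(words) < state_size:
            continue  # too short to form a single state
        openings.append(tuple(words[:state_size]))
        for i in range(len(words) - state_size + 1):
            state = tuple(words[i:i + state_size])
            has_next = i + state_size < len(words)
            transitions[state].append(words[i + state_size] if has_next else END)
    return openings, dict(transitions)


def generate(openings, transitions, rng=random, max_words=30):
    """Start from a real opening, then keep picking a follower of the current state."""
    words = list(rng.choice(openings))
    state_size = len(words)
    while len(words) < max_words:
        nxt = rng.choice(transitions[tuple(words[-state_size:])])
        if nxt is END:
            break
        words.append(nxt)
    return " ".join(words)


if __name__ == "__main__":
    corpus = [
        "the quick brown fox jumps",
        "the quick brown dog sleeps",
        "a lazy dog sleeps all day",
    ]
    openings, transitions = build_model(corpus, state_size=2)
    print(generate(openings, transitions))
```

With a state size of 2, every run of 3 consecutive words in the output already exists somewhere in the corpus. A larger state size makes those borrowed runs longer, which is why the output gets more coherent but less varied.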

And now we will do a very Pythonic thing and avoid reinventing the wheel: let's import Markovify to handle all this for us 😄

And here’s an example of a totally new sentence from HackerNews:

“Client-side full text search in milliseconds with PostgreSQL”

At this point I had my proof of concept, in under 20 minutes.

Now, my next step was to host this piece of code so I could share it with colleagues, but I needed to make sure I wouldn’t lose too much time and money in the process.

With the proof of concept for title generation written as a Python function, my natural choice was to push it to Cloud Functions.

Cloud Functions is a perfect destination for such use-cases:

You work locally focusing only on your code’s functionality and not on the surrounding setup.

Once ready, you can deploy online with a single gcloud functions deploy command.

My project was online and accessible through an HTTPS URL! Calling it, anyone can now generate a new title each time they hit refresh:

“Why we killed our startup in 100 days”

Cool and all, but I can see a small problem: latency.

As a matter of fact, Cloud Functions are started and stopped as needed.

So the whole app, especially everything outside the scope of the get_title function, such as markovify.NewlineText, would be instantiated right when a user loaded the page. It took several seconds to load the 40k lines of corpus into memory, only for the model to be destroyed once the new title was generated, and the whole process was repeated every time a user called the page. :(

To solve this issue, I would only need to load the model into memory once and make all following requests reuse it.

There are several ways to do this, but my favorite one is to transform our simple Python function into a Python webserver that loads the Markov model at startup and executes user requests in parallel.
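A minimal sketch of the load-once idea, using only the standard library: the expensive step runs at module scope, so it happens when the server process starts, and the per-request handler only reads the result. Here build_model is a stand-in for markovify.NewlineText, and CORPUS and BUILD_COUNT are hypothetical names added for the demonstration.

```python
import random

# Hypothetical two-line corpus; the real one holds around 40k titles.
CORPUS = [
    "placeholder title number one",
    "placeholder title number two",
]

BUILD_COUNT = 0  # only here to show how often the expensive step runs


def build_model(lines):
    """Stand-in for the expensive step (markovify.NewlineText in the post)."""
    global BUILD_COUNT
    BUILD_COUNT += 1
    return list(lines)


# Module scope: runs once, when the server process imports this file.
MODEL = build_model(CORPUS)


def get_title(request=None):
    """Per-request handler: reuses MODEL instead of rebuilding it."""
    return random.choice(MODEL)


if __name__ == "__main__":
    for _ in range(3):
        print(get_title())
    print("model built", BUILD_COUNT, "time(s)")
```

However many times get_title is called within the same process, the model is built a single time.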

Enter functions-framework!

Functions-framework will wrap your Python function inside a pre-configured Flask webserver, so that you don’t have to handle the configuration yourself.

To run your new app locally first, all you have to do is start functions-framework with get_title as its target.

And voilà! Your function is now accessible at localhost:8080 just like it would be in Cloud Functions. Except this time it won’t be instantiated and destroyed on a per-user basis.

By also adding this simple Dockerfile next to my main.py file, I could build my webserver into a container that includes functions-framework!

If you have a working python function and no webserver configured you can simply copy-paste this exact Dockerfile.

My directory is as simple as this:

The next step is to deploy our new container onto a service that was made specially for this: Cloud Run.

“Why Dart is not the language of choice for data science”

Our service is back up and now faster than ever 😎

Cloud Run is fantastic! Think of it as running your applications as flexibly as on Kubernetes while deploying as easily as on Cloud Functions.

No underlying infrastructure to provision, configure and keep up to date!

You just build your code into a container (and we’ve just seen how easy that is) and then reference your newly built image name when deploying.

Cloud Run provides scale-to-zero capability, which means that once my service stops receiving requests for a few minutes, the container stops entirely, saving both money and energy.

The only drawback is that the first request to hit my service after a scale-down event has to wait until the container comes back up, which in our case can take a few seconds. We call this a cold boot.

Fortunately, once instantiated for any single request, our container will stay warm for all following requests, with our Markov text model retained in memory and ready to serve the next sentence instantly!

Lessons learned

Optimizations

I think compiling the Markov model once and baking it as a “pickle” into the container itself would be even faster.

This way we would shorten the cold boot we get when a user first calls the app after some idle time, since the model would no longer be rebuilt from the corpus at startup.
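A sketch of that idea, untested against the real app: build the model once, write it to disk with pickle, and only load the file at startup. The dictionary model, the function names and the file name below are all placeholders standing in for the real Markov model.

```python
import os
import pickle
import tempfile


def build_model(titles):
    """Stand-in for the slow step: map each word to the words seen right after it."""
    model = {}
    for title in titles:
        words = title.split()
        for current, nxt in zip(words, words[1:]):
            model.setdefault(current, []).append(nxt)
    return model


def dump_model(model, path):
    """Meant to run once, while the container image is being built."""
    with open(path, "wb") as fh:
        pickle.dump(model, fh, protocol=pickle.HIGHEST_PROTOCOL)


def load_model(path):
    """Meant to run at container startup, instead of rebuilding from the corpus."""
    with open(path, "rb") as fh:
        return pickle.load(fh)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "model.pkl")  # hypothetical file name
        dump_model(build_model(["one two three", "one two four"]), path)
        print(load_model(path))
```

Only unpickle files you produced yourself, since loading a pickle can execute arbitrary code.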

Some fun facts

- Because the HN data ends in 2015, not a single line of the corpus is about web3, and we get “Bitcoin” very rarely.

- Going from idea to deployment on Cloud Run took me around 30 minutes, so no office hours were wasted 😇

- After the core feature of generating titles, I spent some time fiddling with TailwindCSS to make it more attractive. (that’s where I may have spent office time but let’s call it self-training instead)

- The whole project was developed using neovim and the NvChad config as a way to test features.

The source is available on this GitHub repo.

Screenshots

Some screenshots from the final version of the app, accessible at hntitlegen-uanwpkxudq-ew.a.run.app if you want to test it yourself :)